省流

这篇文章以seatunnel 2.3.6版本,讲解了一个zeta引擎的一个任务是如何从提交到运行的全流程,希望通过这篇文档,对刚刚上手seatunnel的朋友提供一些帮助。

这篇文章将从

- seatunnel server端的初始化

- client端的任务提交流程

- server端的接收到任务的执行流程

三部分来记录下一个任务的整体流程

参考

集群拓扑

首先从整体了解下SeaTunnel的Zeta引擎架构, SeaTunnel是基于hazelcast来实现的分布式集群通信

在2.3.6版本之后, 集群中的节点可以被分配为master或worker节点, 从而将调度与执行分开, 避免master节点的负载过高从而出现问题。

并且2.3.6版本还添加了一个功能是, 可以对每个节点添加tag属性, 当提交任务时可以通过tag来选择任务将要运行的节点, 从而达到资源隔离的目的(2.3.6版本有问题,2.3.8版本中进行了修复,如果需要使用此功能请使用2.3.8版本)。

集群的服务端分为master和worker节点, master节点负责接收请求, 逻辑计划生成, 分配任务等(与之前的版本相比,会多了几个backup节点,但是对于集群稳定性来说是一个挺大的提升)。

而worker节点则只负责执行任务, 也就是数据的读取和写入。

提交任务时可以创建hazelcast的客户端连接集群来进行通信, 或者使用restapi来进行通信。

服务端启动

当我们对集群的整体架构有个大致的了解后, 我们再来具体了解下具体的流程

首先看下server端的启动过程。 server端的启动命令为:

sh bin/seatunnel-cluster.sh -d -r <node role type>

当我们查看这个脚本的内容后就会发现, 这个脚本最终的执行命令为:

1

|

java -cp seatunnel-starter.jar org.apache.seatunnel.core.starter.seatunnel.SeaTunnelServer <other_java_jvm_config_and_args>

|

我们查看这个starter.seatunnel.SeaTunnelServer的代码

1

2

3

4

5

6

7

8

9

10

11

|

public class SeaTunnelServer {

public static void main(String[] args) throws CommandException {

ServerCommandArgs serverCommandArgs =

CommandLineUtils.parse(

args,

new ServerCommandArgs(),

EngineType.SEATUNNEL.getStarterShellName(),

true);

SeaTunnel.run(serverCommandArgs.buildCommand());

}

}

|

这个代码是使用了JCommander来解析用户传递的参数并构建并运行Command, serverCommandArgs.buildCommand返回的类为:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

|

public class ServerExecuteCommand implements Command<ServerCommandArgs> {

private final ServerCommandArgs serverCommandArgs;

public ServerExecuteCommand(ServerCommandArgs serverCommandArgs) {

this.serverCommandArgs = serverCommandArgs;

}

@Override

public void execute() {

SeaTunnelConfig seaTunnelConfig = ConfigProvider.locateAndGetSeaTunnelConfig();

String clusterRole = this.serverCommandArgs.getClusterRole();

if (StringUtils.isNotBlank(clusterRole)) {

if (EngineConfig.ClusterRole.MASTER.toString().equalsIgnoreCase(clusterRole)) {

seaTunnelConfig.getEngineConfig().setClusterRole(EngineConfig.ClusterRole.MASTER);

} else if (EngineConfig.ClusterRole.WORKER.toString().equalsIgnoreCase(clusterRole)) {

seaTunnelConfig.getEngineConfig().setClusterRole(EngineConfig.ClusterRole.WORKER);

// in hazelcast lite node will not store IMap data.

seaTunnelConfig.getHazelcastConfig().setLiteMember(true);

} else {

throw new SeaTunnelEngineException("Not supported cluster role: " + clusterRole);

}

} else {

seaTunnelConfig

.getEngineConfig()

.setClusterRole(EngineConfig.ClusterRole.MASTER_AND_WORKER);

}

HazelcastInstanceFactory.newHazelcastInstance(

seaTunnelConfig.getHazelcastConfig(),

Thread.currentThread().getName(),

new SeaTunnelNodeContext(seaTunnelConfig));

}

}

|

在这里会根据配置的角色类型来修改配置信息。 当是worker节点时,将hazelcast节点的类型设置为lite member,在hazelcast中lite member是不进行数据存储的

然后会创建了一个hazelcast实例, 并且传递了SeaTunnelNodeContext实例以及读取并修改的配置信息

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

|

public class SeaTunnelNodeContext extends DefaultNodeContext {

private final SeaTunnelConfig seaTunnelConfig;

public SeaTunnelNodeContext(@NonNull SeaTunnelConfig seaTunnelConfig) {

this.seaTunnelConfig = seaTunnelConfig;

}

@Override

public NodeExtension createNodeExtension(@NonNull Node node) {

return new org.apache.seatunnel.engine.server.NodeExtension(node, seaTunnelConfig);

}

@Override

public Joiner createJoiner(Node node) {

JoinConfig join =

getActiveMemberNetworkConfig(seaTunnelConfig.getHazelcastConfig()).getJoin();

join.verify();

if (node.shouldUseMulticastJoiner(join) && node.multicastService != null) {

super.createJoiner(node);

} else if (join.getTcpIpConfig().isEnabled()) {

log.info("Using LiteNodeDropOutTcpIpJoiner TCP/IP discovery");

return new LiteNodeDropOutTcpIpJoiner(node);

} else if (node.getProperties().getBoolean(DISCOVERY_SPI_ENABLED)

|| isAnyAliasedConfigEnabled(join)

|| join.isAutoDetectionEnabled()) {

super.createJoiner(node);

}

return null;

}

private static boolean isAnyAliasedConfigEnabled(JoinConfig join) {

return !AliasedDiscoveryConfigUtils.createDiscoveryStrategyConfigs(join).isEmpty();

}

private boolean usePublicAddress(JoinConfig join, Node node) {

return node.getProperties().getBoolean(DISCOVERY_SPI_PUBLIC_IP_ENABLED)

|| allUsePublicAddress(

AliasedDiscoveryConfigUtils.aliasedDiscoveryConfigsFrom(join));

}

}

|

在SeaTunnelNodeContext中覆盖了createNodeExtension方法, 将使用engine.server.NodeExtension类, 这个类的代码为:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

|

public class NodeExtension extends DefaultNodeExtension {

private final NodeExtensionCommon extCommon;

public NodeExtension(@NonNull Node node, @NonNull SeaTunnelConfig seaTunnelConfig) {

super(node);

extCommon = new NodeExtensionCommon(node, new SeaTunnelServer(seaTunnelConfig));

}

@Override

public void beforeStart() {

// TODO Get Config from Node here

super.beforeStart();

}

@Override

public void afterStart() {

super.afterStart();

extCommon.afterStart();

}

@Override

public void beforeClusterStateChange(

ClusterState currState, ClusterState requestedState, boolean isTransient) {

super.beforeClusterStateChange(currState, requestedState, isTransient);

extCommon.beforeClusterStateChange(requestedState);

}

@Override

public void onClusterStateChange(ClusterState newState, boolean isTransient) {

super.onClusterStateChange(newState, isTransient);

extCommon.onClusterStateChange(newState);

}

@Override

public Map<String, Object> createExtensionServices() {

return extCommon.createExtensionServices();

}

@Override

public TextCommandService createTextCommandService() {

return new TextCommandServiceImpl(node) {

{

register(HTTP_GET, new Log4j2HttpGetCommandProcessor(this));

register(HTTP_POST, new Log4j2HttpPostCommandProcessor(this));

register(HTTP_GET, new RestHttpGetCommandProcessor(this));

register(HTTP_POST, new RestHttpPostCommandProcessor(this));

}

};

}

@Override

public void printNodeInfo() {

extCommon.printNodeInfo(systemLogger);

}

}

|

在这个代码中, 我们可以看到在构造方法中, 初始化了SeaTunnelServer这个类, 而这个类与最开始的类是同名的, 但是在不同的包下, 这个类的完整类名为: org.apache.seatunnel.engine.server.SeaTunnelServer

我们看下这个类的代码:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

|

public class SeaTunnelServer

implements ManagedService, MembershipAwareService, LiveOperationsTracker {

private static final ILogger LOGGER = Logger.getLogger(SeaTunnelServer.class);

public static final String SERVICE_NAME = "st:impl:seaTunnelServer";

private NodeEngineImpl nodeEngine;

private final LiveOperationRegistry liveOperationRegistry;

private volatile SlotService slotService;

private TaskExecutionService taskExecutionService;

private ClassLoaderService classLoaderService;

private CoordinatorService coordinatorService;

private ScheduledExecutorService monitorService;

@Getter private SeaTunnelHealthMonitor seaTunnelHealthMonitor;

private final SeaTunnelConfig seaTunnelConfig;

private volatile boolean isRunning = true;

public SeaTunnelServer(@NonNull SeaTunnelConfig seaTunnelConfig) {

this.liveOperationRegistry = new LiveOperationRegistry();

this.seaTunnelConfig = seaTunnelConfig;

LOGGER.info("SeaTunnel server start...");

}

@Override

public void init(NodeEngine engine, Properties hzProperties) {

...

if (EngineConfig.ClusterRole.MASTER_AND_WORKER.ordinal()

== seaTunnelConfig.getEngineConfig().getClusterRole().ordinal()) {

startWorker();

startMaster();

} else if (EngineConfig.ClusterRole.WORKER.ordinal()

== seaTunnelConfig.getEngineConfig().getClusterRole().ordinal()) {

startWorker();

} else {

startMaster();

}

...

}

....

}

|

这个类是SeaTunnel Server端的核心代码, 在这个类中会根据节点的角色来启动相关的组件。

稍微总结下seatunnel的流程:

SeaTunnel是借助于hazelcast的基础能力, 来实现集群端的组网, 并调用启动核心的代码。对于这一块有想深入了解的朋友可以去看下hazelcast的相关内容,这里仅仅列出了调用路径。

按照顺序所加载调用的类为

- starter.SeaTunnelServer

- ServerExecutreCommand

- SeaTunnelNodeContext

- NodeExtension

- server.SeaTunnelServer

接下来再来详细看下master节点以及worker节点中所创建的组件

master相关组件

1

2

3

4

5

6

7

8

9

10

|

private void startMaster() {

coordinatorService =

new CoordinatorService(nodeEngine, this, seaTunnelConfig.getEngineConfig());

monitorService = Executors.newSingleThreadScheduledExecutor();

monitorService.scheduleAtFixedRate(

this::printExecutionInfo,

0,

seaTunnelConfig.getEngineConfig().getPrintExecutionInfoInterval(),

TimeUnit.SECONDS);

}

|

在这个方法内,可以看到一个初始化了两个组件

coordinatorService 协调器组件monitorService 监控组件

监控组件所做的事情,在这个方法内,也可以看到,就是周期性的打印信息。

CoordinatorService

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

|

public CoordinatorService(

@NonNull NodeEngineImpl nodeEngine,

@NonNull SeaTunnelServer seaTunnelServer,

EngineConfig engineConfig) {

this.nodeEngine = nodeEngine;

this.logger = nodeEngine.getLogger(getClass());

this.executorService =

Executors.newCachedThreadPool(

new ThreadFactoryBuilder()

.setNameFormat("seatunnel-coordinator-service-%d")

.build());

this.seaTunnelServer = seaTunnelServer;

this.engineConfig = engineConfig;

masterActiveListener = Executors.newSingleThreadScheduledExecutor();

masterActiveListener.scheduleAtFixedRate(

this::checkNewActiveMaster, 0, 100, TimeUnit.MILLISECONDS);

}

private void checkNewActiveMaster() {

try {

if (!isActive && this.seaTunnelServer.isMasterNode()) {

logger.info(

"This node become a new active master node, begin init coordinator service");

if (this.executorService.isShutdown()) {

this.executorService =

Executors.newCachedThreadPool(

new ThreadFactoryBuilder()

.setNameFormat("seatunnel-coordinator-service-%d")

.build());

}

initCoordinatorService();

isActive = true;

} else if (isActive && !this.seaTunnelServer.isMasterNode()) {

isActive = false;

logger.info(

"This node become leave active master node, begin clear coordinator service");

clearCoordinatorService();

}

} catch (Exception e) {

isActive = false;

logger.severe(ExceptionUtils.getMessage(e));

throw new SeaTunnelEngineException("check new active master error, stop loop", e);

}

}

|

协调器组件启动之后,会创建一个线程定时检测自身与集群状态,检查是否需要进行切换。

状态检查有两种

- 当本地标记不是master,但在hazelcast集群中被选举为master时

调用

initCoordinatorService()来进行状态的初始化,同时修改本地状态标记信息

- 当本地标记自身为master,但是在集群中已经不是master时

状态清理

我们看下initCoordinatorService()方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

|

private void initCoordinatorService() {

// 从hazelcast中获取分布式IMAP

runningJobInfoIMap =

nodeEngine.getHazelcastInstance().getMap(Constant.IMAP_RUNNING_JOB_INFO);

runningJobStateIMap =

nodeEngine.getHazelcastInstance().getMap(Constant.IMAP_RUNNING_JOB_STATE);

runningJobStateTimestampsIMap =

nodeEngine.getHazelcastInstance().getMap(Constant.IMAP_STATE_TIMESTAMPS);

ownedSlotProfilesIMap =

nodeEngine.getHazelcastInstance().getMap(Constant.IMAP_OWNED_SLOT_PROFILES);

metricsImap = nodeEngine.getHazelcastInstance().getMap(Constant.IMAP_RUNNING_JOB_METRICS);

// 初始化JobHistoryService

jobHistoryService =

new JobHistoryService(

runningJobStateIMap,

logger,

runningJobMasterMap,

nodeEngine.getHazelcastInstance().getMap(Constant.IMAP_FINISHED_JOB_STATE),

nodeEngine

.getHazelcastInstance()

.getMap(Constant.IMAP_FINISHED_JOB_METRICS),

nodeEngine

.getHazelcastInstance()

.getMap(Constant.IMAP_FINISHED_JOB_VERTEX_INFO),

engineConfig.getHistoryJobExpireMinutes());

// 初始化EventProcess, 用于发送事件到其他服务

eventProcessor =

createJobEventProcessor(

engineConfig.getEventReportHttpApi(),

engineConfig.getEventReportHttpHeaders(),

nodeEngine);

// If the user has configured the connector package service, create it on the master node.

ConnectorJarStorageConfig connectorJarStorageConfig =

engineConfig.getConnectorJarStorageConfig();

if (connectorJarStorageConfig.getEnable()) {

connectorPackageService = new ConnectorPackageService(seaTunnelServer);

}

// 集群恢复后, 尝试恢复之前的历史任务

restoreAllJobFromMasterNodeSwitchFuture =

new PassiveCompletableFuture(

CompletableFuture.runAsync(

this::restoreAllRunningJobFromMasterNodeSwitch, executorService));

}

|

在coordinatorservice中, 会拉取分布式MAP, 这个数据结构是hazelcast的一个数据结构, 可以认为是在集群中数据一致的一个MAP。 在seatunnel中, 使用这个结构来存储任务信息, slot信息等。

在这里还会创建EventProcessor, 这个类是用来将事件通知到其他服务, 比如任务失败, 可以发送信息到配置的接口中, 实现事件推送。

最后, 由于节点启动, 可能是集群异常重启, 或者节点切换, 这时需要恢复历史运行的任务, 那么就会从刚刚获取到的IMAP中获取到之前正在跑的任务列表, 然后尝试进行恢复。

这里的IMAP信息可以开启持久化将信息存储到HDFS等文件系统中, 这样可以在系统完全重启后仍然能够读取到之前的任务状态并进行恢复。

在CoordinatorService中运行的组件有:

- executorService (所有可能被选举为master的节点)

- masterActiveListener(所有可能被选举为master的节点)

- jobHistoryService (master节点)

- eventProcessor (master节点)

– TODO 修改图片,添加executorService

接下来再看下worker节点所启动的组件

– TODO 修改图片,添加executorService

接下来再看下worker节点所启动的组件

worker节点

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

|

private void startWorker() {

taskExecutionService =

new TaskExecutionService(

classLoaderService, nodeEngine, nodeEngine.getProperties());

nodeEngine.getMetricsRegistry().registerDynamicMetricsProvider(taskExecutionService);

taskExecutionService.start();

getSlotService();

}

public SlotService getSlotService() {

if (slotService == null) {

synchronized (this) {

if (slotService == null) {

SlotService service =

new DefaultSlotService(

nodeEngine,

taskExecutionService,

seaTunnelConfig.getEngineConfig().getSlotServiceConfig());

service.init();

slotService = service;

}

}

}

return slotService;

}

|

我们可以看到在startWorker方法中, 也会初始化两个组件

taskExecutionService 任务执行线程池slotService 任务资源管理

SlotService

先来看下SlotService的初始化

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

@Override

public void init() {

initStatus = true;

slotServiceSequence = UUID.randomUUID().toString();

contexts = new ConcurrentHashMap<>();

assignedSlots = new ConcurrentHashMap<>();

unassignedSlots = new ConcurrentHashMap<>();

unassignedResource = new AtomicReference<>(new ResourceProfile());

assignedResource = new AtomicReference<>(new ResourceProfile());

scheduledExecutorService =

Executors.newSingleThreadScheduledExecutor(

r ->

new Thread(

r,

String.format(

"hz.%s.seaTunnel.slotService.thread",

nodeEngine.getHazelcastInstance().getName())));

if (!config.isDynamicSlot()) {

initFixedSlots();

}

unassignedResource.set(getNodeResource());

scheduledExecutorService.scheduleAtFixedRate(

() -> {

try {

LOGGER.fine(

"start send heartbeat to resource manager, this address: "

+ nodeEngine.getClusterService().getThisAddress());

sendToMaster(new WorkerHeartbeatOperation(getWorkerProfile())).join();

} catch (Exception e) {

LOGGER.warning(

"failed send heartbeat to resource manager, will retry later. this address: "

+ nodeEngine.getClusterService().getThisAddress());

}

},

0,

DEFAULT_HEARTBEAT_TIMEOUT,

TimeUnit.MILLISECONDS);

}

|

在SeaTunnel中,会有一个动态slot(DynamicSlot) 的概念。

如果设置为true, 则每个节点不再有Slot数量的限制,可以提交任意数量的任务到此节点上。

如果设置为固定数量的slot, 那么该节点仅能接受这些slot数量的任务运行。

两者的区别在于你的同步使用场景,

如果你的任务都是大数据量的同步任务,那么最好可以设置成固定数量的Slot,避免提交过多任务影响单个节点的稳定性

但是如果每个任务的数据量都很少,其实可以选择动态Slot,这样可以更大限度的提升资源使用率

在初始化时, 会根据是否为动态slot来进行slot数量的初始化

1

2

3

4

5

6

7

8

9

10

11

12

13

|

private void initFixedSlots() {

long maxMemory = Runtime.getRuntime().maxMemory();

for (int i = 0; i < config.getSlotNum(); i++) {

unassignedSlots.put(

i,

new SlotProfile(

nodeEngine.getThisAddress(),

i,

new ResourceProfile(

CPU.of(0), Memory.of(maxMemory / config.getSlotNum())),

slotServiceSequence));

}

}

|

同时我们也可以看到初始化时会启动一个线程, 定时向master节点发送心跳, 心跳信息中则包含了当前节点的信息, 包括已经分配的, 未分配的slot数量等属性,worker节点通过心跳将信息定时更新给master。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

@Override

public synchronized WorkerProfile getWorkerProfile() {

WorkerProfile workerProfile = new WorkerProfile(nodeEngine.getThisAddress());

workerProfile.setProfile(getNodeResource());

workerProfile.setAssignedSlots(assignedSlots.values().toArray(new SlotProfile[0]));

workerProfile.setUnassignedSlots(unassignedSlots.values().toArray(new SlotProfile[0]));

workerProfile.setUnassignedResource(unassignedResource.get());

workerProfile.setAttributes(nodeEngine.getLocalMember().getAttributes());

workerProfile.setDynamicSlot(config.isDynamicSlot());

return workerProfile;

}

private ResourceProfile getNodeResource() {

return new ResourceProfile(CPU.of(0), Memory.of(Runtime.getRuntime().maxMemory()));

}

|

TaskExecutionService

这个组件与任务提交相关, 这里先简单看下,与任务提交的相关代码在后续再深入查看。

在worker节点初始化时, 会新建一个TaskExecutionService对象,并调用其start方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

|

private final ExecutorService executorService =

newCachedThreadPool(new BlockingTaskThreadFactory());

public TaskExecutionService(

ClassLoaderService classLoaderService,

NodeEngineImpl nodeEngine,

HazelcastProperties properties) {

// 加载配置信息

seaTunnelConfig = ConfigProvider.locateAndGetSeaTunnelConfig();

this.hzInstanceName = nodeEngine.getHazelcastInstance().getName();

this.nodeEngine = nodeEngine;

this.classLoaderService = classLoaderService;

this.logger = nodeEngine.getLoggingService().getLogger(TaskExecutionService.class);

// 指标相关

MetricsRegistry registry = nodeEngine.getMetricsRegistry();

MetricDescriptor descriptor =

registry.newMetricDescriptor()

.withTag(MetricTags.SERVICE, this.getClass().getSimpleName());

registry.registerStaticMetrics(descriptor, this);

scheduledExecutorService = Executors.newSingleThreadScheduledExecutor();

// 定时任务更新指标到IMAP中

scheduledExecutorService.scheduleAtFixedRate(

this::updateMetricsContextInImap,

0,

seaTunnelConfig.getEngineConfig().getJobMetricsBackupInterval(),

TimeUnit.SECONDS);

serverConnectorPackageClient =

new ServerConnectorPackageClient(nodeEngine, seaTunnelConfig);

eventBuffer = new ArrayBlockingQueue<>(2048);

// 事件转发服务

eventForwardService =

Executors.newSingleThreadExecutor(

new ThreadFactoryBuilder().setNameFormat("event-forwarder-%d").build());

eventForwardService.submit(

() -> {

List<Event> events = new ArrayList<>();

RetryUtils.RetryMaterial retryMaterial =

new RetryUtils.RetryMaterial(2, true, e -> true);

while (!Thread.currentThread().isInterrupted()) {

try {

events.clear();

Event first = eventBuffer.take();

events.add(first);

eventBuffer.drainTo(events, 500);

JobEventReportOperation operation = new JobEventReportOperation(events);

RetryUtils.retryWithException(

() ->

NodeEngineUtil.sendOperationToMasterNode(

nodeEngine, operation)

.join(),

retryMaterial);

logger.fine("Event forward success, events " + events.size());

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

logger.info("Event forward thread interrupted");

} catch (Throwable t) {

logger.warning(

"Event forward failed, discard events " + events.size(), t);

}

}

});

}

public void start() {

runBusWorkSupplier.runNewBusWork(false);

}

|

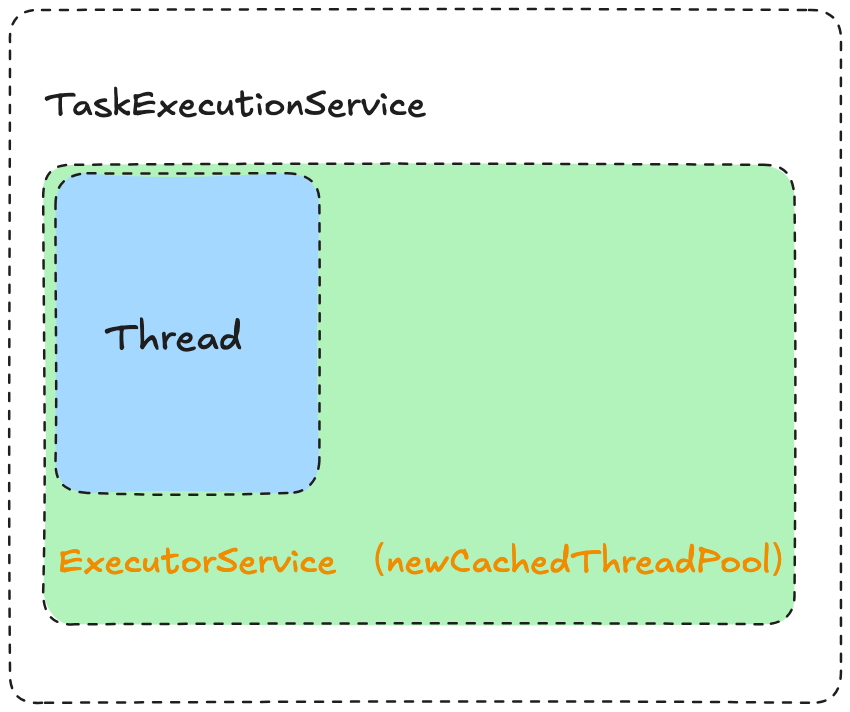

在这个类中,有一个成员变量,创建了一个线程池,该线程池为CachedThreadPool,没有大小限制。

在构造方法中构建了一个定时任务,这个任务会将本地执行的一些指标信息更新到IMAP中。

同时创建了一个任务来将Event信息发送给master节点, 由master节点再将这些Event发送给外部服务。

至此, 服务端所有服务组件都已启动完成

master节点与备选节点上会

- 定时检查自己是否为master节点, 如果是则进行相应的状态转化

master节点上会

- 定时打印集群的状态信息。

- 启动转发服务, 将要推送的事件转发到外部服务

在worker节点上, 启动后会

- 定时将状态信息上报到master节点

- 将任务信息更新到IMAP里面。

- 将在worker产生的要推送给外部服务的事件转发到master节点上。

下面我们会再从一个简单的任务开始, 从客户端看下任务的提交流程。

客户端提交任务

这里以命令行提交任务的形式来讲解任务的提交流程。

命令行提交任务的命令为

./bin/seatunnel/sh -c <config_path>

我们查看这个脚本文件后可以看到这个脚本中最后会调用org.apache.seatunnel.core.starter.seatunnel.SeaTunnelClient这个类

1

2

3

4

5

6

7

8

9

10

11

|

public class SeaTunnelClient {

public static void main(String[] args) throws CommandException {

ClientCommandArgs clientCommandArgs =

CommandLineUtils.parse(

args,

new ClientCommandArgs(),

EngineType.SEATUNNEL.getStarterShellName(),

true);

SeaTunnel.run(clientCommandArgs.buildCommand());

}

}

|

在这个类中,仅有一个main方法, 与上面的server端的代码类似,不过这里构建的是ClientCommandArgs

解析命令行参数

我们查看clientCommandArgs.buildCommand方法

1

2

3

4

5

6

7

8

9

10

11

12

13

|

public Command<?> buildCommand() {

Common.setDeployMode(getDeployMode());

if (checkConfig) {

return new SeaTunnelConfValidateCommand(this);

}

if (encrypt) {

return new ConfEncryptCommand(this);

}

if (decrypt) {

return new ConfDecryptCommand(this);

}

return new ClientExecuteCommand(this);

}

|

这里是调用了jcommander来解析参数, 会根据用户传递的参数来决定构建哪个类, 例如是对配置文件做检查,还是加密文件,解密文件以及是不是Client提交任务的命令。

这里就不再讲解其他几个类,主要来看下ClientExecuteCommand

这个类的主要代码都在execute方法中, 整体方法比较长, 我将分段来描述每一段的作业

连接集群

在这一部分代码中, 做的事情是读取hazelcast-client.yaml文件,尝试建立与server端的连接, 当使用local模式时,会现在本地创建一个hazelcast的实例, 然后连接到这个实例上, 当使用cluster模式时, 则直接连接到集群上。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

|

public void execute() throws CommandExecuteException {

JobMetricsRunner.JobMetricsSummary jobMetricsSummary = null;

LocalDateTime startTime = LocalDateTime.now();

LocalDateTime endTime = LocalDateTime.now();

SeaTunnelConfig seaTunnelConfig = ConfigProvider.locateAndGetSeaTunnelConfig();

try {

String clusterName = clientCommandArgs.getClusterName();

// 加载配置信息

ClientConfig clientConfig = ConfigProvider.locateAndGetClientConfig();

// 根据此次提交的任务类型,当使用local模式时,意味着上面服务端的流程是没有执行的,

// 所以先创建一个本地seatunnel server

if (clientCommandArgs.getMasterType().equals(MasterType.LOCAL)) {

clusterName =

creatRandomClusterName(

StringUtils.isNotEmpty(clusterName)

? clusterName

: Constant.DEFAULT_SEATUNNEL_CLUSTER_NAME);

instance = createServerInLocal(clusterName, seaTunnelConfig);

int port = instance.getCluster().getLocalMember().getSocketAddress().getPort();

clientConfig

.getNetworkConfig()

.setAddresses(Collections.singletonList("localhost:" + port));

}

// 与远程或本地的seatunnel server连接,创建一个engineClient

if (StringUtils.isNotEmpty(clusterName)) {

seaTunnelConfig.getHazelcastConfig().setClusterName(clusterName);

clientConfig.setClusterName(clusterName);

}

engineClient = new SeaTunnelClient(clientConfig);

// 省略第二段代码

// 省略第三段代码

}

} catch (Exception e) {

throw new CommandExecuteException("SeaTunnel job executed failed", e);

} finally {

if (jobMetricsSummary != null) {

// 任务结束,打印日志

log.info(

StringFormatUtils.formatTable(

"Job Statistic Information",

"Start Time",

DateTimeUtils.toString(

startTime, DateTimeUtils.Formatter.YYYY_MM_DD_HH_MM_SS),

"End Time",

DateTimeUtils.toString(

endTime, DateTimeUtils.Formatter.YYYY_MM_DD_HH_MM_SS),

"Total Time(s)",

Duration.between(startTime, endTime).getSeconds(),

"Total Read Count",

jobMetricsSummary.getSourceReadCount(),

"Total Write Count",

jobMetricsSummary.getSinkWriteCount(),

"Total Failed Count",

jobMetricsSummary.getSourceReadCount()

- jobMetricsSummary.getSinkWriteCount()));

}

closeClient();

}

}

|

判断任务类型,调用相关方法

则是根据用户的参数来判断这次的任务类型是什么, 根据参数的不同,调用不同的方法, 例如取消任务, 则会调用相应的取消任务方法, 这次对这里的几个任务不再具体分析, 这次以提交任务为主, 当我们将提交任务的流程弄明白, 这些再去看时也就简单了。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

|

if (clientCommandArgs.isListJob()) {

String jobStatus = engineClient.getJobClient().listJobStatus(true);

System.out.println(jobStatus);

} else if (clientCommandArgs.isGetRunningJobMetrics()) {

String runningJobMetrics = engineClient.getJobClient().getRunningJobMetrics();

System.out.println(runningJobMetrics);

} else if (null != clientCommandArgs.getJobId()) {

String jobState =

engineClient

.getJobClient()

.getJobDetailStatus(Long.parseLong(clientCommandArgs.getJobId()));

System.out.println(jobState);

} else if (null != clientCommandArgs.getCancelJobId()) {

engineClient

.getJobClient()

.cancelJob(Long.parseLong(clientCommandArgs.getCancelJobId()));

} else if (null != clientCommandArgs.getMetricsJobId()) {

String jobMetrics =

engineClient

.getJobClient()

.getJobMetrics(Long.parseLong(clientCommandArgs.getMetricsJobId()));

System.out.println(jobMetrics);

} else if (null != clientCommandArgs.getSavePointJobId()) {

engineClient

.getJobClient()

.savePointJob(Long.parseLong(clientCommandArgs.getSavePointJobId()));

} else {

// 省略第三段代码

}

|

提交任务到集群

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

|

// 获取配置文件的路径, 并检查文件是否存在

Path configFile = FileUtils.getConfigPath(clientCommandArgs);

checkConfigExist(configFile);

JobConfig jobConfig = new JobConfig();

// 下面会根据这次任务是根据savepoint重启还是启动新任务来调用不同的方法来构建ClientJobExecutionEnvironment对象

ClientJobExecutionEnvironment jobExecutionEnv;

jobConfig.setName(clientCommandArgs.getJobName());

if (null != clientCommandArgs.getRestoreJobId()) {

jobExecutionEnv =

engineClient.restoreExecutionContext(

configFile.toString(),

clientCommandArgs.getVariables(),

jobConfig,

seaTunnelConfig,

Long.parseLong(clientCommandArgs.getRestoreJobId()));

} else {

jobExecutionEnv =

engineClient.createExecutionContext(

configFile.toString(),

clientCommandArgs.getVariables(),

jobConfig,

seaTunnelConfig,

clientCommandArgs.getCustomJobId() != null

? Long.parseLong(clientCommandArgs.getCustomJobId())

: null);

}

// get job start time

startTime = LocalDateTime.now();

// create job proxy

// 提交任务

ClientJobProxy clientJobProxy = jobExecutionEnv.execute();

// 判断是否为异步提交,当异步提交时会直接退出,不进行状态检查

if (clientCommandArgs.isAsync()) {

if (clientCommandArgs.getMasterType().equals(MasterType.LOCAL)) {

log.warn("The job is running in local mode, can not use async mode.");

} else {

return;

}

}

// register cancelJob hook

// 添加hook方法, 当提交完成任务后, 命令行退出时, 取消刚刚提交的任务

Runtime.getRuntime()

.addShutdownHook(

new Thread(

() -> {

CompletableFuture<Void> future =

CompletableFuture.runAsync(

() -> {

log.info(

"run shutdown hook because get close signal");

shutdownHook(clientJobProxy);

});

try {

future.get(15, TimeUnit.SECONDS);

} catch (Exception e) {

log.error("Cancel job failed.", e);

}

}));

// 同步,检查任务状态相关代码

// 获取任务id, 然后启动后台线程定时检查任务状态

long jobId = clientJobProxy.getJobId();

JobMetricsRunner jobMetricsRunner = new JobMetricsRunner(engineClient, jobId);

// 创建线程,定时检查状态

executorService =

Executors.newSingleThreadScheduledExecutor(

new ThreadFactoryBuilder()

.setNameFormat("job-metrics-runner-%d")

.setDaemon(true)

.build());

executorService.scheduleAtFixedRate(

jobMetricsRunner,

0,

seaTunnelConfig.getEngineConfig().getPrintJobMetricsInfoInterval(),

TimeUnit.SECONDS);

// wait for job complete

// 等待任务结束, 检查任务状态,当任务为异常退出时, 抛出异常

JobResult jobResult = clientJobProxy.waitForJobCompleteV2();

jobStatus = jobResult.getStatus();

if (StringUtils.isNotEmpty(jobResult.getError())

|| jobResult.getStatus().equals(JobStatus.FAILED)) {

throw new SeaTunnelEngineException(jobResult.getError());

}

// get job end time

endTime = LocalDateTime.now();

// get job statistic information when job finished

jobMetricsSummary = engineClient.getJobMetricsSummary(jobId);

|

下面我们就看下jobExecutionEnv这个类的初始化与execute方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

|

public ClientJobExecutionEnvironment(

JobConfig jobConfig,

String jobFilePath,

List<String> variables,

SeaTunnelHazelcastClient seaTunnelHazelcastClient,

SeaTunnelConfig seaTunnelConfig,

boolean isStartWithSavePoint,

Long jobId) {

super(jobConfig, isStartWithSavePoint);

this.jobFilePath = jobFilePath;

this.variables = variables;

this.seaTunnelHazelcastClient = seaTunnelHazelcastClient;

this.jobClient = new JobClient(seaTunnelHazelcastClient);

this.seaTunnelConfig = seaTunnelConfig;

Long finalJobId;

if (isStartWithSavePoint || jobId != null) {

finalJobId = jobId;

} else {

finalJobId = jobClient.getNewJobId();

}

this.jobConfig.setJobContext(new JobContext(finalJobId));

this.connectorPackageClient = new ConnectorPackageClient(seaTunnelHazelcastClient);

}

|

这个类的初始化中,很简单,只是变量赋值操作,没有做其他初始化操作。再来看下execute方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

|

public ClientJobProxy execute() throws ExecutionException, InterruptedException {

LogicalDag logicalDag = getLogicalDag();

log.info(

"jarUrls is : [{}]",

jarUrls.stream().map(URL::getPath).collect(Collectors.joining(", ")));

JobImmutableInformation jobImmutableInformation =

new JobImmutableInformation(

Long.parseLong(jobConfig.getJobContext().getJobId()),

jobConfig.getName(),

isStartWithSavePoint,

seaTunnelHazelcastClient.getSerializationService().toData(logicalDag),

jobConfig,

new ArrayList<>(jarUrls),

new ArrayList<>(connectorJarIdentifiers));

return jobClient.createJobProxy(jobImmutableInformation);

}

|

这个方法中,先调用getLogicalDag生产了逻辑计划,然后构建JobImmutableInformation 信息,传递给jobClient,我们先看后面的步骤,等会再看如何生成的逻辑计划。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

|

public ClientJobProxy createJobProxy(@NonNull JobImmutableInformation jobImmutableInformation) {

return new ClientJobProxy(hazelcastClient, jobImmutableInformation);

}

public ClientJobProxy(

@NonNull SeaTunnelHazelcastClient seaTunnelHazelcastClient,

@NonNull JobImmutableInformation jobImmutableInformation) {

this.seaTunnelHazelcastClient = seaTunnelHazelcastClient;

this.jobId = jobImmutableInformation.getJobId();

submitJob(jobImmutableInformation);

}

private void submitJob(JobImmutableInformation jobImmutableInformation) {

LOGGER.info(

String.format(

"Start submit job, job id: %s, with plugin jar %s",

jobImmutableInformation.getJobId(),

jobImmutableInformation.getPluginJarsUrls()));

ClientMessage request =

SeaTunnelSubmitJobCodec.encodeRequest(

jobImmutableInformation.getJobId(),

seaTunnelHazelcastClient

.getSerializationService()

.toData(jobImmutableInformation),

jobImmutableInformation.isStartWithSavePoint());

PassiveCompletableFuture<Void> submitJobFuture =

seaTunnelHazelcastClient.requestOnMasterAndGetCompletableFuture(request);

submitJobFuture.join();

LOGGER.info(

String.format(

"Submit job finished, job id: %s, job name: %s",

jobImmutableInformation.getJobId(), jobImmutableInformation.getJobName()));

}

|

在这里的代码可以看到,生成JobImmutableInformation后,会将这个信息转换为ClientMessage(SeaTunnelSubmitJobCodec)然后发送给Master节点,也就是hazelcast server中的master节点。提交完成之后又回到上面的任务状态检测相关步骤。

这里的消息发送是调用了hazelcast的相关方法,我们对其的实现不需要关注。

逻辑计划解析

下面一章会再回到Server端看下当收到client端发送的提交任务后的处理逻辑,这里我们先回到前面,看下在客户端如何生成的逻辑计划。

1

|

LogicalDag logicalDag = getLogicalDag();

|

先看下LogicalDag的结构

1

2

3

4

5

|

@Getter private JobConfig jobConfig;

private final Set<LogicalEdge> edges = new LinkedHashSet<>();

private final Map<Long, LogicalVertex> logicalVertexMap = new LinkedHashMap<>();

private IdGenerator idGenerator;

private boolean isStartWithSavePoint = false;

|

在这个类里有这几个变量,有两个比较关键的类LogicalEdge和LogicalVertex,通过任务之间的关联关联构建出DAG。

LogicalEdge的类中存储的变量很简单, 存储了两个点的关系。

1

2

3

4

5

6

7

8

9

|

/** The input vertex connected to this edge. */

private LogicalVertex inputVertex;

/** The target vertex connected to this edge. */

private LogicalVertex targetVertex;

private Long inputVertexId;

private Long targetVertexId;

|

LogicalVertex的变量为这几个变量,有当前点的编号,以及所需的并行度,以及Action接口, Action接口会有SourceAction,SinkAction,TransformAction等不同的实现类。

1

2

3

4

5

|

private Long vertexId;

private Action action;

/** Number of subtasks to split this task into at runtime. */

private int parallelism;

|

看下getLogicalDag的方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

|

public LogicalDag getLogicalDag() {

//

ImmutablePair<List<Action>, Set<URL>> immutablePair = getJobConfigParser().parse(null);

actions.addAll(immutablePair.getLeft());

// seatunnel有个功能是不需要服务端所有节点有全部的依赖,可以在客户端中将所需依赖上传到服务端

// 这里的if-else是这个功能的一些逻辑判断,判断是否需要从客户端将jar包上传到服务端,从而服务端不需要维护全部的jar包

boolean enableUploadConnectorJarPackage =

seaTunnelConfig.getEngineConfig().getConnectorJarStorageConfig().getEnable();

if (enableUploadConnectorJarPackage) {

Set<ConnectorJarIdentifier> commonJarIdentifiers =

connectorPackageClient.uploadCommonPluginJars(

Long.parseLong(jobConfig.getJobContext().getJobId()), commonPluginJars);

Set<URL> commonPluginJarUrls = getJarUrlsFromIdentifiers(commonJarIdentifiers);

Set<ConnectorJarIdentifier> pluginJarIdentifiers = new HashSet<>();

uploadActionPluginJar(actions, pluginJarIdentifiers);

Set<URL> connectorPluginJarUrls = getJarUrlsFromIdentifiers(pluginJarIdentifiers);

connectorJarIdentifiers.addAll(commonJarIdentifiers);

connectorJarIdentifiers.addAll(pluginJarIdentifiers);

jarUrls.addAll(commonPluginJarUrls);

jarUrls.addAll(connectorPluginJarUrls);

actions.forEach(

action -> {

addCommonPluginJarsToAction(

action, commonPluginJarUrls, commonJarIdentifiers);

});

} else {

jarUrls.addAll(commonPluginJars);

jarUrls.addAll(immutablePair.getRight());

actions.forEach(

action -> {

addCommonPluginJarsToAction(

action, new HashSet<>(commonPluginJars), Collections.emptySet());

});

}

return getLogicalDagGenerator().generate();

}

|

方法中首先调用了.parse(null)方法,此方法的返回值是一个不可变二元组,第一个值为List<Action>对象,getJobConfigParser返回的对象是MultipleTableJobConfigParser

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

public MultipleTableJobConfigParser(

Config seaTunnelJobConfig,

IdGenerator idGenerator,

JobConfig jobConfig,

List<URL> commonPluginJars,

boolean isStartWithSavePoint) {

this.idGenerator = idGenerator;

this.jobConfig = jobConfig;

this.commonPluginJars = commonPluginJars;

this.isStartWithSavePoint = isStartWithSavePoint;

this.seaTunnelJobConfig = seaTunnelJobConfig;

this.envOptions = ReadonlyConfig.fromConfig(seaTunnelJobConfig.getConfig("env"));

this.fallbackParser =

new JobConfigParser(idGenerator, commonPluginJars, this, isStartWithSavePoint);

}

|

当调用parse(null)方法时,会进行解析

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

|

public ImmutablePair<List<Action>, Set<URL>> parse(ClassLoaderService classLoaderService) {

// 将配置文件中的 env.jars添加到 commonJars中

this.fillJobConfigAndCommonJars();

// 从配置文件中,将source,transform,sink的配置分别读取处理

List<? extends Config> sourceConfigs =

TypesafeConfigUtils.getConfigList(

seaTunnelJobConfig, "source", Collections.emptyList());

List<? extends Config> transformConfigs =

TypesafeConfigUtils.getConfigList(

seaTunnelJobConfig, "transform", Collections.emptyList());

List<? extends Config> sinkConfigs =

TypesafeConfigUtils.getConfigList(

seaTunnelJobConfig, "sink", Collections.emptyList());

// 获取连接器的jar包地址

List<URL> connectorJars = getConnectorJarList(sourceConfigs, sinkConfigs);

if (!commonPluginJars.isEmpty()) {

// 将commonJars添加到连接器的jars中

connectorJars.addAll(commonPluginJars);

}

ClassLoader parentClassLoader = Thread.currentThread().getContextClassLoader();

ClassLoader classLoader;

if (classLoaderService == null) {

// 由于我们刚才传递了null,所以这里会创建SeaTunnelChildFirstClassLoader类加载器

// 从名字也能看出,这里会与默认的加载器不同,不会先调用父类进行加载,

// 而是自己找不到之后再调用父类进行加载,避免jar包冲突

classLoader = new SeaTunnelChildFirstClassLoader(connectorJars, parentClassLoader);

} else {

classLoader =

classLoaderService.getClassLoader(

Long.parseLong(jobConfig.getJobContext().getJobId()), connectorJars);

}

try {

Thread.currentThread().setContextClassLoader(classLoader);

// 检查DAG里面是否构成环,避免后续的构建过程陷入循环

ConfigParserUtil.checkGraph(sourceConfigs, transformConfigs, sinkConfigs);

LinkedHashMap<String, List<Tuple2<CatalogTable, Action>>> tableWithActionMap =

new LinkedHashMap<>();

log.info("start generating all sources.");

for (int configIndex = 0; configIndex < sourceConfigs.size(); configIndex++) {

Config sourceConfig = sourceConfigs.get(configIndex);

// parseSource方法为真正生成source的方法

// 返回值为2元组,第一个值为 当前source生成的表名称

// 第二个值为 CatalogTable和Action的二元组列表

// 由于SeaTunnel Source支持读取多表,所以第二个值为列表

Tuple2<String, List<Tuple2<CatalogTable, Action>>> tuple2 =

parseSource(configIndex, sourceConfig, classLoader);

tableWithActionMap.put(tuple2._1(), tuple2._2());

}

log.info("start generating all transforms.");

// parseTransforms来生成transform

// 这里将上面的 tableWithActionMap传递了进去,所以不需要返回值

parseTransforms(transformConfigs, classLoader, tableWithActionMap);

log.info("start generating all sinks.");

List<Action> sinkActions = new ArrayList<>();

for (int configIndex = 0; configIndex < sinkConfigs.size(); configIndex++) {

Config sinkConfig = sinkConfigs.get(configIndex);

// parseSink方法来生成sink

// 同样,传递了tableWithActionMap

sinkActions.addAll(

parseSink(configIndex, sinkConfig, classLoader, tableWithActionMap));

}

Set<URL> factoryUrls = getUsedFactoryUrls(sinkActions);

return new ImmutablePair<>(sinkActions, factoryUrls);

} finally {

// 将当前线程的类加载器切换为原来的类加载器

Thread.currentThread().setContextClassLoader(parentClassLoader);

if (classLoaderService != null) {

classLoaderService.releaseClassLoader(

Long.parseLong(jobConfig.getJobContext().getJobId()), connectorJars);

}

}

}

|

解析Source

先来看下parseSource方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

|

public Tuple2<String, List<Tuple2<CatalogTable, Action>>> parseSource(

int configIndex, Config sourceConfig, ClassLoader classLoader) {

final ReadonlyConfig readonlyConfig = ReadonlyConfig.fromConfig(sourceConfig);

// factoryId就是我们配置里面的 source名称,例如 FakeSource, Jdbc

final String factoryId = getFactoryId(readonlyConfig);

// 获取当前数据源生成的 表 名称,注意这里的表可能并不对应一个表

// 由于 seatunnel source支持多表读取,那么这里就会出现一对多的关系

final String tableId =

readonlyConfig.getOptional(CommonOptions.RESULT_TABLE_NAME).orElse(DEFAULT_ID);

// 获取并行度

final int parallelism = getParallelism(readonlyConfig);

// 这个地方是由于某些Source还不支持通过Factory工厂来构建,所以会有两种构建方法

// 后续当所有连接器都支持通过工厂来创建后,这里的代码会被删除掉,所以这次忽略掉这部分代码

// 方法内部是查询是否有相应的工厂类,相应的工厂类不存在时返回 true,不存在时返回false

boolean fallback =

isFallback(

classLoader,

TableSourceFactory.class,

factoryId,

(factory) -> factory.createSource(null));

if (fallback) {

Tuple2<CatalogTable, Action> tuple =

fallbackParser.parseSource(sourceConfig, jobConfig, tableId, parallelism);

return new Tuple2<>(tableId, Collections.singletonList(tuple));

}

// 通过FactoryUtil来创建Source

// 返回对象为 SeaTunnelSource实例,以及List<CatalogTable>

// 这里会创建我们同步任务中Source的实例,catalogtable列表表示这个数据源读取的表的表结构等信息

Tuple2<SeaTunnelSource<Object, SourceSplit, Serializable>, List<CatalogTable>> tuple2 =

FactoryUtil.createAndPrepareSource(readonlyConfig, classLoader, factoryId);

// 获取当前source connector的jar包

Set<URL> factoryUrls = new HashSet<>();

factoryUrls.addAll(getSourcePluginJarPaths(sourceConfig));

List<Tuple2<CatalogTable, Action>> actions = new ArrayList<>();

long id = idGenerator.getNextId();

String actionName = JobConfigParser.createSourceActionName(configIndex, factoryId);

SeaTunnelSource<Object, SourceSplit, Serializable> source = tuple2._1();

source.setJobContext(jobConfig.getJobContext());

PluginUtil.ensureJobModeMatch(jobConfig.getJobContext(), source);

// 构建 SourceAction

SourceAction<Object, SourceSplit, Serializable> action =

new SourceAction<>(id, actionName, tuple2._1(), factoryUrls, new HashSet<>());

action.setParallelism(parallelism);

for (CatalogTable catalogTable : tuple2._2()) {

actions.add(new Tuple2<>(catalogTable, action));

}

return new Tuple2<>(tableId, actions);

}

|

看一下新版本中是如何通过工厂来创建Source实例的

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

|

public static <T, SplitT extends SourceSplit, StateT extends Serializable>

Tuple2<SeaTunnelSource<T, SplitT, StateT>, List<CatalogTable>> createAndPrepareSource(

ReadonlyConfig options, ClassLoader classLoader, String factoryIdentifier) {

try {

// 通过SPI加载TableSourceFactory的类,然后根据factoryIdentifier找对应的类

// 即 找到 souce对应的 SourceFactory

final TableSourceFactory factory =

discoverFactory(classLoader, TableSourceFactory.class, factoryIdentifier);

// 通过Factory来创建Source实例,这个Source实例就是你任务中对应类型的Source

// 也就是说Source类的初始化会在Client端创建一次,需要注意这里的环境是否能够连接到该Source

SeaTunnelSource<T, SplitT, StateT> source =

createAndPrepareSource(factory, options, classLoader);

List<CatalogTable> catalogTables;

try {

// 获取 source会产生的表 列表。包含了字段,数据类型,分区信息等

catalogTables = source.getProducedCatalogTables();

} catch (UnsupportedOperationException e) {

// 为了兼容有些Connector未实现getProducedCatalogTables方法

// 调用老的获取数据类型的方法,并转换为Catalog

SeaTunnelDataType<T> seaTunnelDataType = source.getProducedType();

final String tableId =

options.getOptional(CommonOptions.RESULT_TABLE_NAME).orElse(DEFAULT_ID);

catalogTables =

CatalogTableUtil.convertDataTypeToCatalogTables(seaTunnelDataType, tableId);

}

LOG.info(

"get the CatalogTable from source {}: {}",

source.getPluginName(),

catalogTables.stream()

.map(CatalogTable::getTableId)

.map(TableIdentifier::toString)

.collect(Collectors.joining(",")));

// 这个代码已经过时

if (options.get(SourceOptions.DAG_PARSING_MODE) == ParsingMode.SHARDING) {

CatalogTable catalogTable = catalogTables.get(0);

catalogTables.clear();

catalogTables.add(catalogTable);

}

return new Tuple2<>(source, catalogTables);

} catch (Throwable t) {

throw new FactoryException(

String.format(

"Unable to create a source for identifier '%s'.", factoryIdentifier),

t);

}

}

private static <T, SplitT extends SourceSplit, StateT extends Serializable>

SeaTunnelSource<T, SplitT, StateT> createAndPrepareSource(

TableSourceFactory factory, ReadonlyConfig options, ClassLoader classLoader) {

// 通过TableSourceFactory来创建Source

TableSourceFactoryContext context = new TableSourceFactoryContext(options, classLoader);

ConfigValidator.of(context.getOptions()).validate(factory.optionRule());

TableSource<T, SplitT, StateT> tableSource = factory.createSource(context);

return tableSource.createSource();

}

|

在客户端就会通过SPI加载到Source相应的Factory然后创建出对应的Source实例出来,所以这里需要保证提交的客户端也能够与Source/Sink端建立连接,避免网络连不通的问题。

接下来在看一下如何创建Transform

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

|

public void parseTransforms(

List<? extends Config> transformConfigs,

ClassLoader classLoader,

LinkedHashMap<String, List<Tuple2<CatalogTable, Action>>> tableWithActionMap) {

if (CollectionUtils.isEmpty(transformConfigs) || transformConfigs.isEmpty()) {

return;

}

Queue<Config> configList = new LinkedList<>(transformConfigs);

int index = 0;

while (!configList.isEmpty()) {

parseTransform(index++, configList, classLoader, tableWithActionMap);

}

}

private void parseTransform(

int index,

Queue<Config> transforms,

ClassLoader classLoader,

LinkedHashMap<String, List<Tuple2<CatalogTable, Action>>> tableWithActionMap) {

Config config = transforms.poll();

final ReadonlyConfig readonlyConfig = ReadonlyConfig.fromConfig(config);

final String factoryId = getFactoryId(readonlyConfig);

// get jar urls

Set<URL> jarUrls = new HashSet<>();

jarUrls.addAll(getTransformPluginJarPaths(config));

final List<String> inputIds = getInputIds(readonlyConfig);

// inputIds为source_table_name,根据这个值找到所依赖的上游source

// 目前Transform不支持对多表进行处理,所以如果所依赖的上游是多表,会抛出异常

List<Tuple2<CatalogTable, Action>> inputs =

inputIds.stream()

.map(tableWithActionMap::get)

.filter(Objects::nonNull)

.peek(

input -> {

if (input.size() > 1) {

throw new JobDefineCheckException(

"Adding transform to multi-table source is not supported.");

}

})

.flatMap(Collection::stream)

.collect(Collectors.toList());

// inputs为空,表明当前Transform节点找不到任何上游的节点

// 此时会有几种情况

if (inputs.isEmpty()) {

if (transforms.isEmpty()) {

// 未设置source_table_name,设置结果与之前不对应并且只有一个transform时

// 把最后一个source作为这个transform的上游表

inputs = findLast(tableWithActionMap);

} else {

// 所依赖的transform可能还没有创建,将本次的transform再放回队列中,后续再进行解析

transforms.offer(config);

return;

}

}

// 这次transform结果产生的表名称

final String tableId =

readonlyConfig.getOptional(CommonOptions.RESULT_TABLE_NAME).orElse(DEFAULT_ID);

// 获取上游source的Action

Set<Action> inputActions =

inputs.stream()

.map(Tuple2::_2)

.collect(Collectors.toCollection(LinkedHashSet::new));

// 验证所依赖的多个上游,是否产生的表结构都相同,只有所有的表结构都相同才能进入一个transform来处理

checkProducedTypeEquals(inputActions);

// 设置并行度

int spareParallelism = inputs.get(0)._2().getParallelism();

int parallelism =

readonlyConfig.getOptional(CommonOptions.PARALLELISM).orElse(spareParallelism);

// 创建Transform实例,与刚刚通过Source工厂来创建差不多的行为

CatalogTable catalogTable = inputs.get(0)._1();

SeaTunnelTransform<?> transform =

FactoryUtil.createAndPrepareTransform(

catalogTable, readonlyConfig, classLoader, factoryId);

transform.setJobContext(jobConfig.getJobContext());

long id = idGenerator.getNextId();

String actionName = JobConfigParser.createTransformActionName(index, factoryId);

// 封装成Action

TransformAction transformAction =

new TransformAction(

id,

actionName,

new ArrayList<>(inputActions),

transform,

jarUrls,

new HashSet<>());

transformAction.setParallelism(parallelism);

// 放入到map中,此时map里面存储了source和transform

// 以每个节点产生的表结构为key,action作为value

tableWithActionMap.put(

tableId,

Collections.singletonList(

new Tuple2<>(transform.getProducedCatalogTable(), transformAction)));

}

|

解析Sink

当看完source/transform的解析之后,对于sink的解析逻辑也会比较明了

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

|

public List<SinkAction<?, ?, ?, ?>> parseSink(

int configIndex,

Config sinkConfig,

ClassLoader classLoader,

LinkedHashMap<String, List<Tuple2<CatalogTable, Action>>> tableWithActionMap) {

ReadonlyConfig readonlyConfig = ReadonlyConfig.fromConfig(sinkConfig);

//

String factoryId = getFactoryId(readonlyConfig);

// 获取当前sink节点依赖的上游节点

List<String> inputIds = getInputIds(readonlyConfig);

// 在tableWithActionMap中查找

List<List<Tuple2<CatalogTable, Action>>> inputVertices =

inputIds.stream()

.map(tableWithActionMap::get)

.filter(Objects::nonNull)

.collect(Collectors.toList());

// 当sink节点找不到上游节点时,找到最后一个节点信息作为上游节点

// 这里与transform不一样的地方是,不会再等其他sink节点初始化完成,因为sink节点不可能依赖与其他sink节点

if (inputVertices.isEmpty()) {

// Tolerates incorrect configuration of simple graph

inputVertices = Collections.singletonList(findLast(tableWithActionMap));

} else if (inputVertices.size() > 1) {

for (List<Tuple2<CatalogTable, Action>> inputVertex : inputVertices) {

if (inputVertex.size() > 1) {

// 当一个sink节点即有多个上游节点,且某个上游节点还会产生多表时抛出异常

// sink可以支持多个数据源,或者单个数据源下产生多表,不能同时支持多个数据源,且某个数据源下存在多表

throw new JobDefineCheckException(

"Sink don't support simultaneous writing of data from multi-table source and other sources.");

}

}

}

// 与解析source一样,对老代码的兼容

boolean fallback =

isFallback(

classLoader,

TableSinkFactory.class,

factoryId,

(factory) -> factory.createSink(null));

if (fallback) {

return fallbackParser.parseSinks(configIndex, inputVertices, sinkConfig, jobConfig);

}

// 获取sink的连接器jar包

Set<URL> jarUrls = new HashSet<>();

jarUrls.addAll(getSinkPluginJarPaths(sinkConfig));

List<SinkAction<?, ?, ?, ?>> sinkActions = new ArrayList<>();

// 多个数据源的情况

if (inputVertices.size() > 1) {

Set<Action> inputActions =

inputVertices.stream()

.flatMap(Collection::stream)

.map(Tuple2::_2)

.collect(Collectors.toCollection(LinkedHashSet::new));

// 检查多个上游数据源产生的表结构是否一致

checkProducedTypeEquals(inputActions);

// 创建sinkAction

Tuple2<CatalogTable, Action> inputActionSample = inputVertices.get(0).get(0);

SinkAction<?, ?, ?, ?> sinkAction =

createSinkAction(

inputActionSample._1(),

inputActions,

readonlyConfig,

classLoader,

jarUrls,

new HashSet<>(),

factoryId,

inputActionSample._2().getParallelism(),

configIndex);

sinkActions.add(sinkAction);

return sinkActions;

}

// 此时只有一个数据源,且此数据源下可能会产生多表,循环创建sinkAction

for (Tuple2<CatalogTable, Action> tuple : inputVertices.get(0)) {

SinkAction<?, ?, ?, ?> sinkAction =

createSinkAction(

tuple._1(),

Collections.singleton(tuple._2()),

readonlyConfig,

classLoader,

jarUrls,

new HashSet<>(),

factoryId,

tuple._2().getParallelism(),

configIndex);

sinkActions.add(sinkAction);

}

// 当一个数据源下多表时与多个数据源 会多进行这么这一步

// 上面的createSinkAction是一致的

// 此方法内会判断sink是否支持多表,以及

Optional<SinkAction<?, ?, ?, ?>> multiTableSink =

tryGenerateMultiTableSink(

sinkActions, readonlyConfig, classLoader, factoryId, configIndex);

// 最终会将所创建的sink action作为返回值返回

return multiTableSink

.<List<SinkAction<?, ?, ?, ?>>>map(Collections::singletonList)

.orElse(sinkActions);

}

|

接下来看下创建sinkAction方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

|

private SinkAction<?, ?, ?, ?> createSinkAction(

CatalogTable catalogTable,

Set<Action> inputActions,

ReadonlyConfig readonlyConfig,

ClassLoader classLoader,

Set<URL> factoryUrls,

Set<ConnectorJarIdentifier> connectorJarIdentifiers,

String factoryId,

int parallelism,

int configIndex) {

// 使用工厂类创建sink

SeaTunnelSink<?, ?, ?, ?> sink =

FactoryUtil.createAndPrepareSink(

catalogTable, readonlyConfig, classLoader, factoryId);

sink.setJobContext(jobConfig.getJobContext());

SinkConfig actionConfig =

new SinkConfig(catalogTable.getTableId().toTablePath().toString());

long id = idGenerator.getNextId();

String actionName =

JobConfigParser.createSinkActionName(

configIndex, factoryId, actionConfig.getMultipleRowTableId());

// 创建sinkAction

SinkAction<?, ?, ?, ?> sinkAction =

new SinkAction<>(

id,

actionName,

new ArrayList<>(inputActions),

sink,

factoryUrls,

connectorJarIdentifiers,

actionConfig);

if (!isStartWithSavePoint) {

// 这里需要注意,当非从savepoint启动时,会进行savemode的处理

handleSaveMode(sink);

}

sinkAction.setParallelism(parallelism);

return sinkAction;

}

public void handleSaveMode(SeaTunnelSink<?, ?, ?, ?> sink) {

// 当sink类支持了savemode特性时,会进行savemode处理

// 例如删除表,重建表,报错等

if (SupportSaveMode.class.isAssignableFrom(sink.getClass())) {

SupportSaveMode saveModeSink = (SupportSaveMode) sink;

// 当 设置savemode在client端执行时,会在client端去做这些事

// 我们之前出现过一个错误是当在客户端执行完毕后,到集群后任务执行出错,卡在scheduling的状态

// 导致数据被清空后没有及时写入

// 以及需要注意这个地方执行的机器到sink集群的网络是否能够连通,推荐将这个行为放到server端执行

if (envOptions

.get(EnvCommonOptions.SAVEMODE_EXECUTE_LOCATION)

.equals(SaveModeExecuteLocation.CLIENT)) {

log.warn(

"SaveMode execute location on CLIENT is deprecated, please use CLUSTER instead.");

Optional<SaveModeHandler> saveModeHandler = saveModeSink.getSaveModeHandler();

if (saveModeHandler.isPresent()) {

try (SaveModeHandler handler = saveModeHandler.get()) {

new SaveModeExecuteWrapper(handler).execute();

} catch (Exception e) {

throw new SeaTunnelRuntimeException(HANDLE_SAVE_MODE_FAILED, e);

}

}

}

}

}

|

我们看完了如何去解析Source/Transform/Sink的逻辑,再回到调用的地方

1

2

3

4

5

6

7

8

9

10

|

List<Action> sinkActions = new ArrayList<>();

for (int configIndex = 0; configIndex < sinkConfigs.size(); configIndex++) {

Config sinkConfig = sinkConfigs.get(configIndex);

// parseSink方法来生成sink

// 同样,传递了tableWithActionMap

sinkActions.addAll(

parseSink(configIndex, sinkConfig, classLoader, tableWithActionMap));

}

Set<URL> factoryUrls = getUsedFactoryUrls(sinkActions);

return new ImmutablePair<>(sinkActions, factoryUrls);

|

parseSink会返回所有创建的Sink Action,而每个Action都维护了upstream Action,所以我们能通过最终的Sink Action找到相关联的Transform Action和Source Action

最终调用getUsedFactoryUrls或找到此链路上的所有依赖的Jar

然后返回一个二元组

逻辑计划解析

再回到逻辑计划生成的部分

1

2

3

4

5

6

7

|

public LogicalDag getLogicalDag() {

//

ImmutablePair<List<Action>, Set<URL>> immutablePair = getJobConfigParser().parse(null);

actions.addAll(immutablePair.getLeft());

....

return getLogicalDagGenerator().generate();

}

|

在上面看完了如何去解析配置,接下来看下如何去生成逻辑计划

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

// 初始化将我们生成的所有SinkAction传入

protected LogicalDagGenerator getLogicalDagGenerator() {

return new LogicalDagGenerator(actions, jobConfig, idGenerator, isStartWithSavePoint);

}

public LogicalDag generate() {

// 根据action来生成节点信息

actions.forEach(this::createLogicalVertex);

// 创建边

Set<LogicalEdge> logicalEdges = createLogicalEdges();

// 构建LogicalDag对象,并将解析的值设置到相应属性中

LogicalDag logicalDag = new LogicalDag(jobConfig, idGenerator);

logicalDag.getEdges().addAll(logicalEdges);

logicalDag.getLogicalVertexMap().putAll(logicalVertexMap);

logicalDag.setStartWithSavePoint(isStartWithSavePoint);

return logicalDag;

}

|

创建逻辑计划节点

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

|

private void createLogicalVertex(Action action) {

// 获取当前action的id,判断当map中已经存在则返回

final Long logicalVertexId = action.getId();

if (logicalVertexMap.containsKey(logicalVertexId)) {

return;

}

// 对上游的依赖进行循环创建

// map对象的存储结构为:

// 当前节点的id为key

// value为一个list,存储下游使用到该节点的id编号

action.getUpstream()

.forEach(

inputAction -> {

createLogicalVertex(inputAction);

inputVerticesMap

.computeIfAbsent(

inputAction.getId(), id -> new LinkedHashSet<>())

.add(logicalVertexId);

});

// 最后创建当前节点的信息

final LogicalVertex logicalVertex =

new LogicalVertex(logicalVertexId, action, action.getParallelism());

// 注意这里有两个map

// 一个为inputVerticesMap,一个为logicalVertexMap

// inputVerticesMap中存储了节点之间的关系

// logicalVertexMap存储了节点编号与节点的关系

logicalVertexMap.put(logicalVertexId, logicalVertex);

}

private Set<LogicalEdge> createLogicalEdges() {

// 使用上面创建的两个map来创建边

return inputVerticesMap.entrySet().stream()

.map(

entry ->

entry.getValue().stream()

.map(

targetId ->

new LogicalEdge(

logicalVertexMap.get(

entry.getKey()),

logicalVertexMap.get(targetId)))

.collect(Collectors.toList()))

.flatMap(Collection::stream)

.collect(Collectors.toCollection(LinkedHashSet::new));

}

|

上面的配置中,会根据上下游关系生成这样的逻辑计划图,并且由于Fake2节点是没有任务下游的,并不会计入到逻辑计划中

小结一下

至此我们看完了在客户端如何完成一个任务的提交流程

小结一下:

- 首先会判断我们执行的模式,当我们是local模式时,会在本机创建一个server节点

- 然后在当前节点创建一个hazelcast节点,与hazelcast集群进行连接,连接到集群或者刚刚启动的本地节点

- 接下来判断我们这次的任务类型来调用不同的方法

- 以提交任务为例,会解析配置文件,并进行逻辑计划解析,在逻辑计划解析时,会在提交的机器上创建Source/Transform/Sink实例。并且去执行savemode功能,有可能会建表,重建表,删除数据操作(当启用客户端执行时)

- 当逻辑计划解析完成后,会将信息编码,然后通过hazelcast的集群通信功能,将信息发送给server的master节点

- 发送完成后,根据配置决定退出还是继续做任务状态的检测

- 程序添加hook配置,当客户端退出后取消刚刚提交的任务

服务端提交任务相关

我们再回顾一下当服务端启动后会执行的组件:

- coordinatorService

仅在master/standby节点启用,会监听集群状态,主备切换

- SlotService

在worker节点中启用,会定期上报自身信息到master中

- TaskExecutionSerive

在worker节点中启用,会定时更新执行的任务指标到IMAP中

在集群未接收到任何任务时,会运行这些组件,当Client发送一条SeaTunnelSubmitJobCodec信息到服务端后,服务端又是如何处理的呢?

接收消息

因为客户端与服务端在不同的机器上,所有这里无法使用方法调用,而是使用了消息传递,当服务端接收到一条消息后是如何进行相关的方法调用的呢

首先我们在上面的代码中,知道客户端向服务端发送的是一条类型为SeaTunnelSubmitJobCodec的消息

1

2

3

4

5

6

7

8

9

10

|

// 客户端相关代码

ClientMessage request =

SeaTunnelSubmitJobCodec.encodeRequest(

jobImmutableInformation.getJobId(),

seaTunnelHazelcastClient

.getSerializationService()

.toData(jobImmutableInformation),

jobImmutableInformation.isStartWithSavePoint());

PassiveCompletableFuture<Void> submitJobFuture =

seaTunnelHazelcastClient.requestOnMasterAndGetCompletableFuture(request);

|

我们进入SeaTunnelSubmitJobCodec这个类,查看他的相关调用类,可以找到一个SeaTunnelMessageTaskFactoryProvider的类,在这个里中维护了一个消息类型到MessageTask的映射关系,也可以理解为客户端消息到服务端调用类的映射关系,以SeaTunnelSubmitJobCodec为例,会返回SubmitJobTask这个类

1

2

3

4

5

6

7

8

9

10

11

12

|

private final Int2ObjectHashMap<MessageTaskFactory> factories = new Int2ObjectHashMap<>(60);

private void initFactories() {

factories.put(

SeaTunnelPrintMessageCodec.REQUEST_MESSAGE_TYPE,

(clientMessage, connection) ->

new PrintMessageTask(clientMessage, node, connection));

factories.put(

SeaTunnelSubmitJobCodec.REQUEST_MESSAGE_TYPE,

(clientMessage, connection) -> new SubmitJobTask(clientMessage, node, connection));

.....

}

|

当我们查看SubmitJobTask这个类时,又会发现继续调用了SubmitJobOperation这个类

1

2

3

4

5

6

7

|

@Override

protected Operation prepareOperation() {

return new SubmitJobOperation(

parameters.jobId,

parameters.jobImmutableInformation,

parameters.isStartWithSavePoint);

}

|

在SubmitJobOperation中我们可以看到真正调用的地方,将我们的信息交给了CoordinatorService组件,调用了其submitJob方法

1

2

3

4

5

6

7

8

|

@Override

protected PassiveCompletableFuture<?> doRun() throws Exception {

SeaTunnelServer seaTunnelServer = getService();

return seaTunnelServer

.getCoordinatorService()

.submitJob(jobId, jobImmutableInformation, isStartWithSavePoint);

}

|

这时一个客户端的消息就真正的被交给服务端来进行方法调用了,至于其他类型的操作也都可以类似找到相关的类,就不再赘述。

CoordinatorService

接下来看下在CoordinatorService是如何进行任务提交的

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

|

public PassiveCompletableFuture<Void> submitJob(

long jobId, Data jobImmutableInformation, boolean isStartWithSavePoint) {

CompletableFuture<Void> jobSubmitFuture = new CompletableFuture<>();

// 首先会根据任务id来判断,当存在相同任务的id时,直接返回

if (getJobMaster(jobId) != null) {

logger.warning(

String.format(

"The job %s is currently running; no need to submit again.", jobId));

jobSubmitFuture.complete(null);

return new PassiveCompletableFuture<>(jobSubmitFuture);

}

// 初始化JobMaster对象

JobMaster jobMaster =

new JobMaster(

jobImmutableInformation,

this.nodeEngine,

executorService,

getResourceManager(),

getJobHistoryService(),

runningJobStateIMap,

runningJobStateTimestampsIMap,

ownedSlotProfilesIMap,

runningJobInfoIMap,

metricsImap,

engineConfig,

seaTunnelServer);

//

executorService.submit(

() -> {

try {

// 由于2.3.6中任务id可以由用户传递,而在seatunnel中会根据任务id来做一些状态判断

// 所以这里的检查是保证在当前的状态中,不会存在相同id的任务

if (!isStartWithSavePoint

&& getJobHistoryService().getJobMetrics(jobId) != null) {

throw new JobException(

String.format(

"The job id %s has already been submitted and is not starting with a savepoint.",

jobId));

}

// 将当前任务的信息添加到IMAP中

runningJobInfoIMap.put(

jobId,

new JobInfo(System.currentTimeMillis(), jobImmutableInformation));

runningJobMasterMap.put(jobId, jobMaster);

// 对JobMaster做初始化操作

jobMaster.init(

runningJobInfoIMap.get(jobId).getInitializationTimestamp(), false);

// 当jobMaster初始化完成后,会认为任务创建成功

jobSubmitFuture.complete(null);

} catch (Throwable e) {

String errorMsg = ExceptionUtils.getMessage(e);

logger.severe(String.format("submit job %s error %s ", jobId, errorMsg));

jobSubmitFuture.completeExceptionally(new JobException(errorMsg));

}

if (!jobSubmitFuture.isCompletedExceptionally()) {

// 当任务正常提交后,调用jobMaster的run方法开始执行任务

// 以及最后会检查任务状态,从内部状态中将此次任务信息删除

try {

jobMaster.run();

} finally {

// voidCompletableFuture will be cancelled when zeta master node

// shutdown to simulate master failure,

// don't update runningJobMasterMap is this case.

if (!jobMaster.getJobMasterCompleteFuture().isCancelled()) {

runningJobMasterMap.remove(jobId);

}

}

} else {

runningJobInfoIMap.remove(jobId);

runningJobMasterMap.remove(jobId);

}

});

return new PassiveCompletableFuture<>(jobSubmitFuture);

}

|

可以看到在服务端,会通过创建一个JobMaster对象,由这个对象来进行单个任务的管理。

在创建JobMaster对象时,会通过getResourceManager方法来获取资源管理对象,以及通过getJobHistoryService方法获取任务历史信息,jobHistoryService在启动时就会创建完成,ResourceManage则采用了懒加载的方式,在第一次有任务提交之后才会进行创建

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

/** Lazy load for resource manager */

public ResourceManager getResourceManager() {

if (resourceManager == null) {

synchronized (this) {

if (resourceManager == null) {

ResourceManager manager =

new ResourceManagerFactory(nodeEngine, engineConfig)

.getResourceManager();

manager.init();

resourceManager = manager;

}

}

}

return resourceManager;

}

|

ResourceManager

目前seatunnel也仅支持standalone的部署方式,当初始化ResourceManager时,会获取到集群所有节点,然后向其发送SyncWorkerProfileOperation操作来获取节点的信息,然后更新到内部的registerWorker状态中

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

|

@Override

public void init() {

log.info("Init ResourceManager");

initWorker();

}

private void initWorker() {

log.info("initWorker... ");

List<Address> aliveNode =

nodeEngine.getClusterService().getMembers().stream()

.map(Member::getAddress)

.collect(Collectors.toList());

log.info("init live nodes: {}", aliveNode);

List<CompletableFuture<Void>> futures =

aliveNode.stream()

.map(

node ->

sendToMember(new SyncWorkerProfileOperation(), node)

.thenAccept(

p -> {

if (p != null) {

registerWorker.put(

node, (WorkerProfile) p);

log.info(

"received new worker register: "

+ ((WorkerProfile)

p)

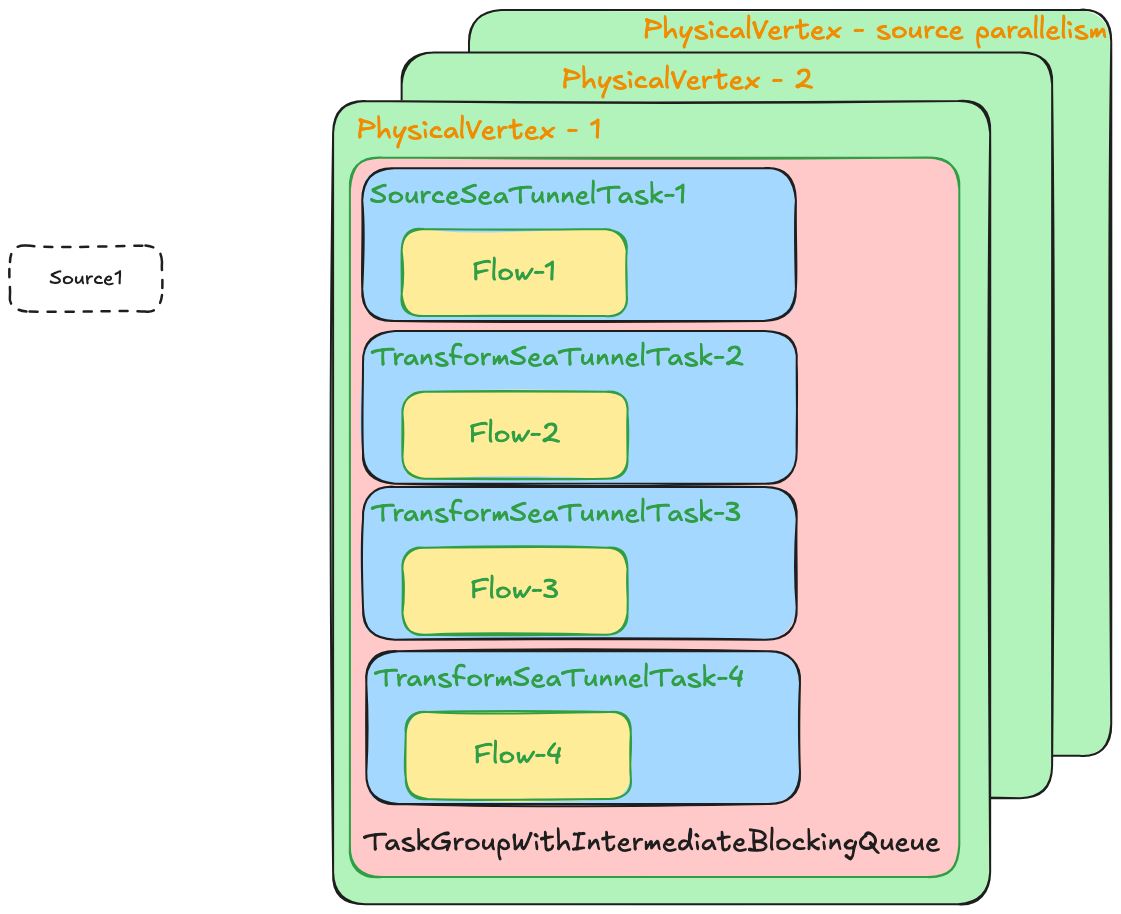

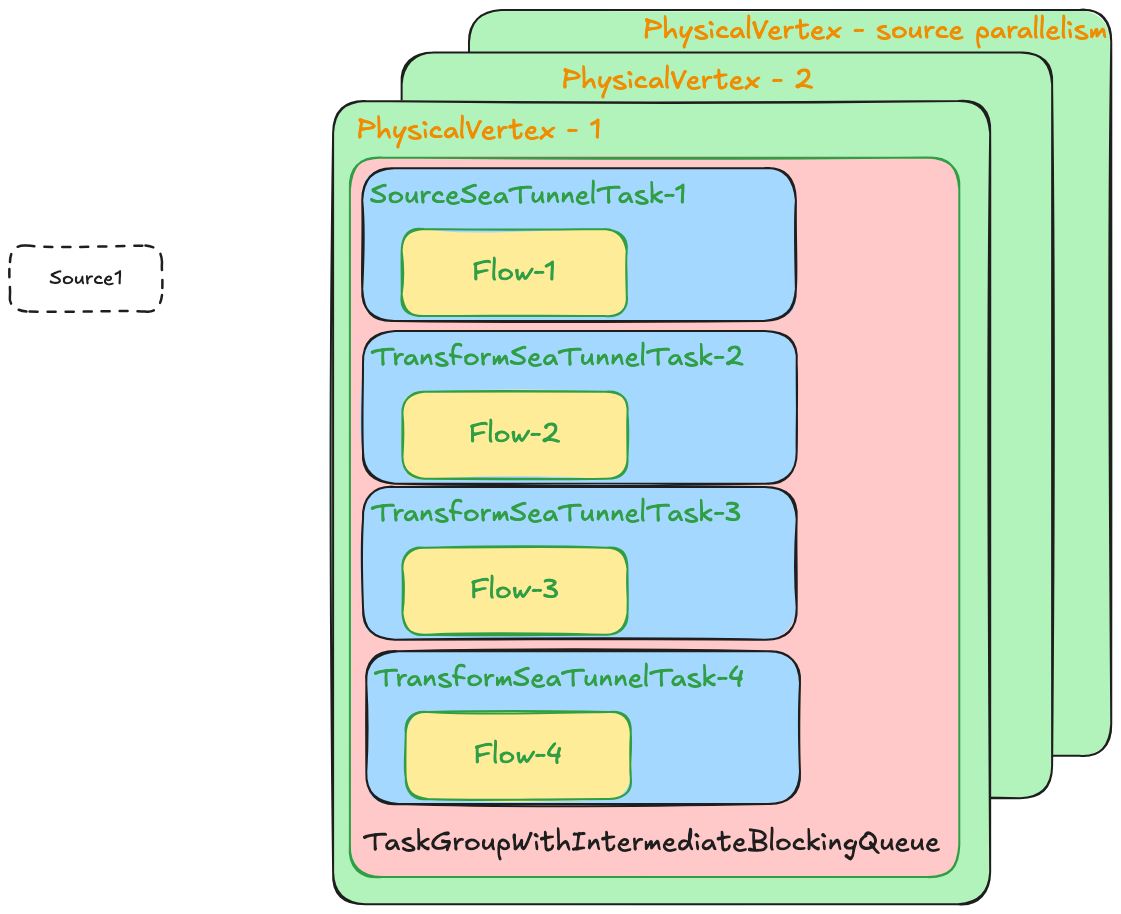

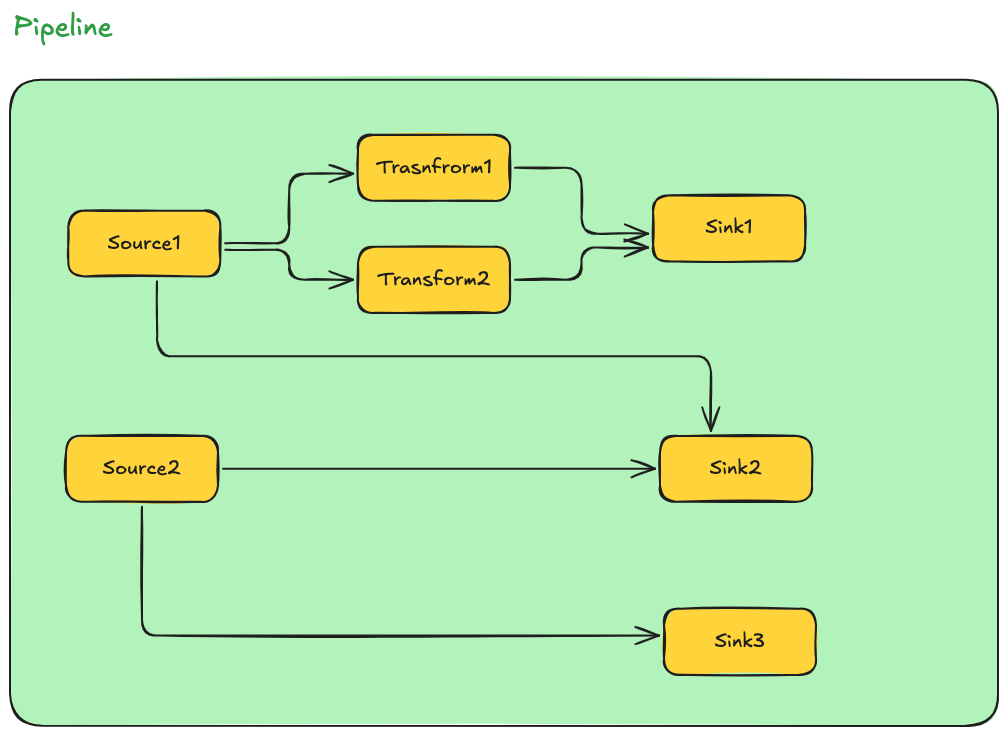

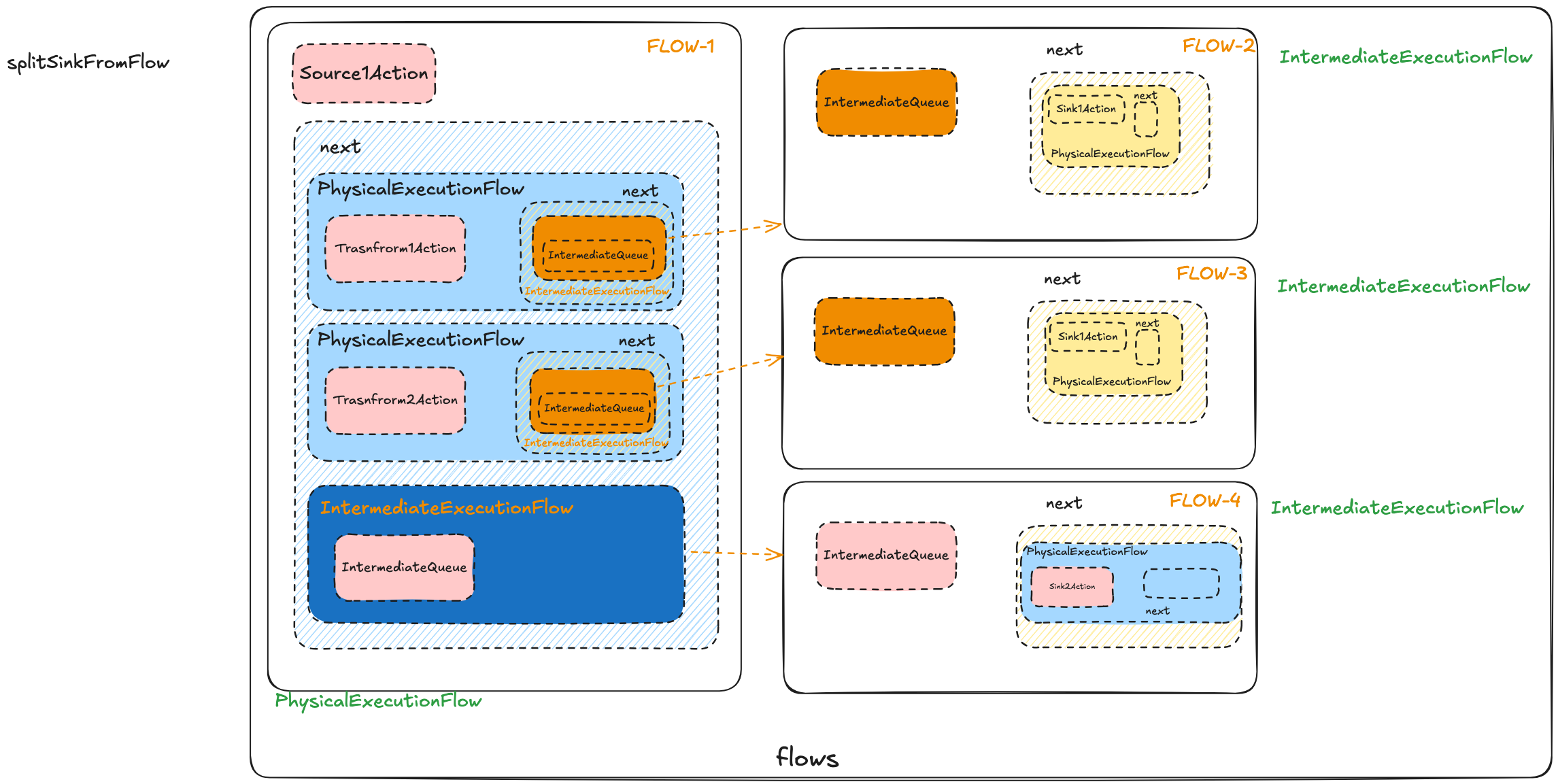

.getAddress());